Excel is an excellent tool for analyzing data. With data in Excel, you can chart, sort, apply filters, implement grouping with outlining, use pivot tables reports, build regression models, and more. But first you need to get data into Excel and this can be costly and time consuming.

1.Create a Word file, paste the web URL. 2.Save the Word as.iqy with.txt format. 3.Choose MS-DOS as coding. 4.Create an Excel file and click 'Data Get External Data Run Web Query (Or Run Saved Query) ' of the Bar. Besides, here is Tool for capturing web page for you to use. Excel provides a very useful option to gather data from websites, called web queries. These have been introduced with Excel 97 and have been further enhanced in the versions released after Excel 97. This article shows how you can setup a web query so that you can make the worksheet dynamically update based on values in so called parameter cells. Choose the File menu's New command to open a blank workbook. You need to place query results. Making and using Excel Web Queries Your.iqy web query files go into this folder: The User Library folder is Hidden by Apple. Unhide it (these directions work with all current versions of Mac OS).

This article explains several ways of extracting web page data and importing it into Excel.

Getting external data using Excel Web Query

Web Queries are an easy, built-in way to bring data into Microsoft Excel from the Web. You can point a Web query at an HTML document that resides on a Web server and pull part or all of the contents into your spreadsheet. You can use a Web query to retrieve refreshable data that is stored on your intranet or the Internet, such as a single table, multiple tables, or all of the text on a Web page. Then you can analyze the data by using the tools and features in Excel.

Web Queries actively use table elements inside the HTML of the page to specify the content to extract. Unfortunately, the dependence on table elements is Achilles' heel of Web Queries. Table-based web page design used to be very popular when Web Queries were first introduced in Excel 97. Nowadays table-based layout is considered obsolete and inefficient. It has been almost completely replaced by Cascading Style Sheets (CSS). Web Queries do not recognize structured data presented using CSS. Web Queries also have other limitations:

- They have no support for client-side scripting.

- You can import data from only one URL per query.

- There's no frames support. For parameterized queries, you must be prepared to create or edit an iqy file.

- Sites requiring authentication and passwords provide additional challenges. They may require coded workarounds or may be unsolvable.

- Web Queries lack basic automation like processing multi-page tables or loading data from details pages linked to a primary table.

Advanced Excel Web Query

Microsoft Research Labs has created an Excel 2007 Web Data Add-In that improves an Excel's built-in functionality for importing data from web pages. The add-in plugs into Excel 2007 with its entry point located on the Data Tab under the From Web option. The system extracts data by learning from a user's selection of data they wish to capture into Excel. The Add-In can be downloaded from Microsoft Research Labs.

This add-in is a research beta and is unsupported by Microsoft. There is no much documentation and the program is functional but uncomlete.

Web scraping using XPath and VBA

XPath is a XML query language that can be used to locate a specific part of an XML document. A common approach taken by many web scrapers is to parse an HTML document into an XML document and then use XPath to query the document. A typical XPath expression looks like /html/body/form/div/div/div[7]/div[3]/div[3]/div/h2/span[2]/span. It can be obtained using, for example, Firebug add-on and Firefox browser. Having the expression you can use VBA and the Microsoft Internet Control to navigate to the target webpage and scrape the needed data.

Instead of the Microsoft Internet Control you can download an open source library Selenium VBA, which is a Windows COM library that uses the popular Selenium web testing tool. The library makes it possible to automate web browsing using Excel and VBA code or using a simple VBS file executed by double-clicking on it. Mts 458 controller manual transfer switch. User's actions can be recorded using the Firefox plugin named 'Selenium IDE' and translated to VBA or VBS with the provided formatters.

Getting external data from a web page using a web scraping tool

The limitations of Web Queries can be overcome by web data extraction software also known as web scraping software. Data Toolbar is a browser automation tool that provides such functionality. To prepare a web data extraction project a user specifies a starting URL, a crawling rule and content or page HTML elements to collect. The program then goes through all available pages and collects data and images as a human would do manually using mouse clicks and copy-and-paste. Hiveswap: act 2 soundtrack edition download for mac osx.

Microsoft Research Labs has created an Excel 2007 Web Data Add-In that improves an Excel's built-in functionality for importing data from web pages. The add-in plugs into Excel 2007 with its entry point located on the Data Tab under the From Web option. The system extracts data by learning from a user's selection of data they wish to capture into Excel. The Add-In can be downloaded from Microsoft Research Labs.

This add-in is a research beta and is unsupported by Microsoft. There is no much documentation and the program is functional but uncomlete.

Web scraping using XPath and VBA

XPath is a XML query language that can be used to locate a specific part of an XML document. A common approach taken by many web scrapers is to parse an HTML document into an XML document and then use XPath to query the document. A typical XPath expression looks like /html/body/form/div/div/div[7]/div[3]/div[3]/div/h2/span[2]/span. It can be obtained using, for example, Firebug add-on and Firefox browser. Having the expression you can use VBA and the Microsoft Internet Control to navigate to the target webpage and scrape the needed data.

Instead of the Microsoft Internet Control you can download an open source library Selenium VBA, which is a Windows COM library that uses the popular Selenium web testing tool. The library makes it possible to automate web browsing using Excel and VBA code or using a simple VBS file executed by double-clicking on it. Mts 458 controller manual transfer switch. User's actions can be recorded using the Firefox plugin named 'Selenium IDE' and translated to VBA or VBS with the provided formatters.

Getting external data from a web page using a web scraping tool

The limitations of Web Queries can be overcome by web data extraction software also known as web scraping software. Data Toolbar is a browser automation tool that provides such functionality. To prepare a web data extraction project a user specifies a starting URL, a crawling rule and content or page HTML elements to collect. The program then goes through all available pages and collects data and images as a human would do manually using mouse clicks and copy-and-paste. Hiveswap: act 2 soundtrack edition download for mac osx.

Data Toolbar enhances Web Query engine by providing an automated data extraction tool that supports flexible CSS based content, client-side scripting, password protected web sites and complex navigation rules. The tool converts complex web sites to a plain CSV file or other format compatible with Excel. Instead of quering a web page directly the Excel external range query should be pointed to the output file of the scraper. That simple approach does not require any programming against the web site HTML.

For example, suppose you need to import product price information from Ebay into Excel. Excel is not able to query Ebay web pages directly because these pages do not contain any table elements. Data Toolbar can help you resolve that problem. Use the following steps to get external data from a web page:

- Start your browser and use Data Toolbar to specify content to extract and navigation rules.

- Run the DataTool crawler and save extracted content as an HTML table.

- Switch to Excel and open the Data tab. In the Get External Data group, click From Web.

- In the New Web Query dialog box specify the location of the local HTML file generated by the scraper.

- Select data by clicking on the yellow icon in the left top corner of the table and click the Import button.

- Specify where you want to put data, auto refresh and formatting properties.

- If you need to refresh the Excel data later on, rerun DataTool scraper and save its output to the same location. Excel can automatically detect changes and update its external data range. The Data Tool can be scheduled for automatic data collection to keep the local file up-to-date.

Excel Mac Web Query

You can find more details on working with external data sources in the Get external data from a Web page article on the Microsoft Office web site.

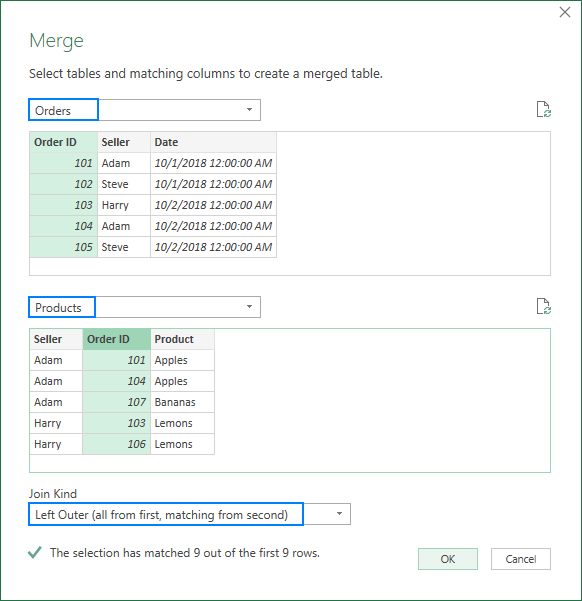

How To Merge Queries Excel

Data Toolbar works with Chrome, Firefox and Internet Explorer. Start with Data Toolbar Free Edition. The free version has the same functionality as the full version but its output is limited to 100 rows. There is no expiration date or any other limitations. Full feature version is only $24.